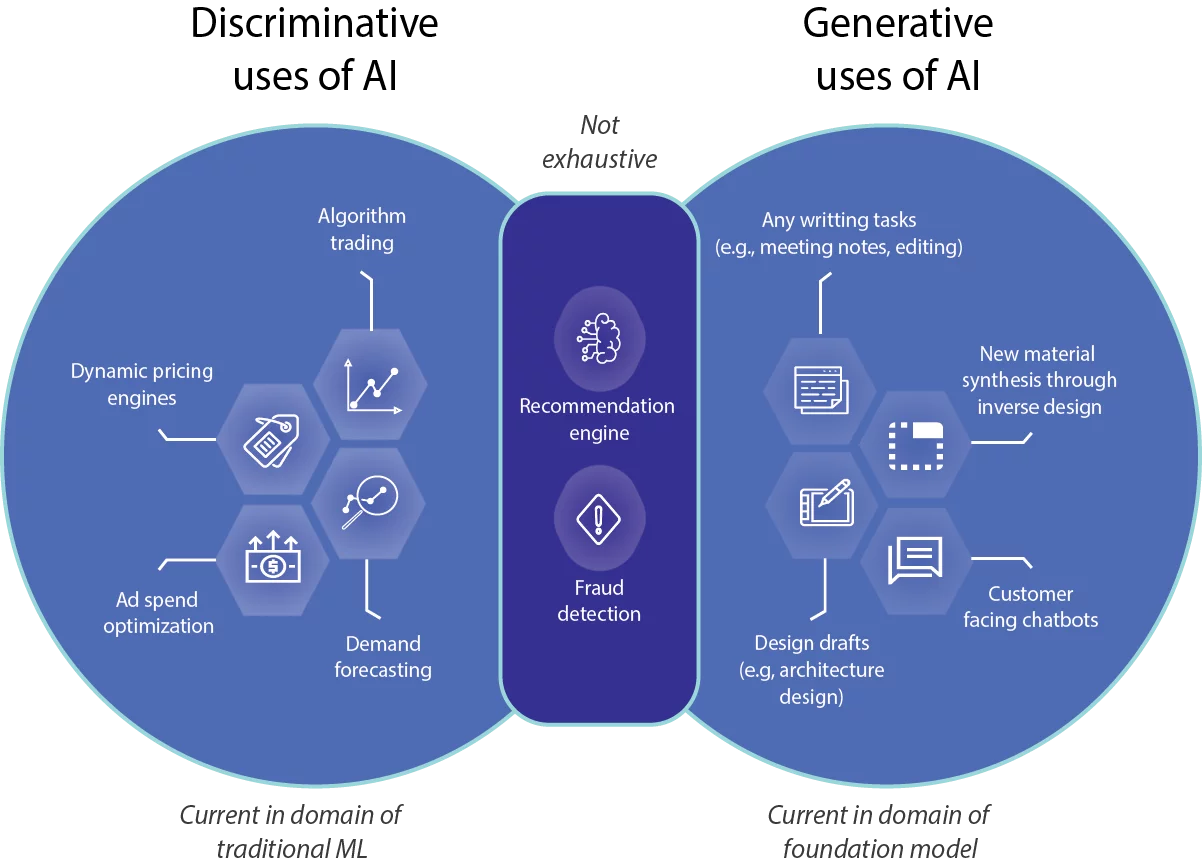

For example, predictive machine-learning models are trained, using many examples, to predict whether a certain X-ray shows signs of a tumor or whether a particular borrower is likely to default on a loan. Generative AI can be thought of as a machine-learning model that is trained to create new data, rather than to make a prediction about a specific dataset.

"When it comes to the actual machinery underlying generative AI and other types of AI, the distinctions can be a little fuzzy. Oftentimes, the same algorithms can be used for both," says Phillip Isola, an associate professor of electrical engineering and computer science at MIT, and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL).

One big difference from earlier, simpler language models is that ChatGPT is far larger and more complex, with billions of parameters. It has also been trained on an enormous amount of data: in this case, much of the publicly available text on the internet. In this huge corpus of text, words and sentences appear in sequences with certain dependencies.

The model learns the patterns of these blocks of text and uses this knowledge to propose what might come next. While bigger datasets are one catalyst that led to the generative AI boom, a variety of major research advances also led to more complex deep-learning architectures. In 2014, a machine-learning architecture known as a generative adversarial network (GAN) was proposed by researchers at the University of Montreal.

A GAN pairs two models: a generator that produces outputs and a discriminator that tries to tell generated outputs from real data. The generator tries to fool the discriminator, and in the process learns to make more realistic outputs. The image generator StyleGAN is based on these types of models. Diffusion models were introduced a year later by researchers at Stanford University and the University of California at Berkeley. By iteratively refining their output, these models learn to generate new data samples that resemble samples in a training dataset, and they have been used to create realistic-looking images.

These are only a few of the many approaches that can be used for generative AI. What all of these approaches have in common is that they convert inputs into a set of tokens, which are numerical representations of chunks of data. As long as your data can be converted into this standard token format, then in theory you could apply these methods to generate new data that look similar.
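To make the idea of tokens and "what might come next" concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how ChatGPT or any production model works: the tokens are whole words, the "model" is a simple count of which token follows which, and the function names (`build_vocab`, `train_bigram`, `predict_next`) and the sample sentence are made up for illustration.

```python
from collections import Counter, defaultdict


def build_vocab(words):
    """Assign each distinct word an integer ID, in order of first appearance."""
    vocab = {}
    for word in words:
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab


def train_bigram(token_ids):
    """Count how often each token ID is immediately followed by each other ID."""
    counts = defaultdict(Counter)
    for current, following in zip(token_ids, token_ids[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, token_id):
    """Return the most frequently observed follower of token_id, or None."""
    followers = counts.get(token_id)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; real systems train on vastly more text.
corpus = "the cat sat on the mat and the cat slept"
words = corpus.split()

vocab = build_vocab(words)                      # word -> integer token ID
id_to_word = {i: w for w, i in vocab.items()}   # integer token ID -> word
token_ids = [vocab[w] for w in words]           # the text as a list of numbers

counts = train_bigram(token_ids)
next_id = predict_next(counts, vocab["the"])

print(token_ids)
print(id_to_word[next_id])
```

Large language models replace the count table with a neural network that has billions of parameters, and they usually split text into sub-word pieces rather than whole words, but the overall loop of "turn data into numbers, learn what tends to follow, then propose a continuation" is the same shape.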

What Is Sentiment Analysis in AI?

While generative models can achieve incredible results, they aren't the best choice for all types of data. For tasks that involve making predictions on structured data, like the tabular data in a spreadsheet, generative AI models tend to be outperformed by traditional machine-learning methods, says Devavrat Shah, the Andrew and Erna Viterbi Professor in Electrical Engineering and Computer Science at MIT and a member of IDSS and of the Laboratory for Information and Decision Systems.

"Previously, humans had to talk to machines in the language of machines to make things happen. Now, this interface has figured out how to talk to both humans and machines," says Shah. Generative AI chatbots are now being used in call centers to field questions from human customers, but this application underscores one potential red flag of implementing these models: worker displacement.

How Does AI Create Art?

One promising future direction Isola sees for generative AI is its use for fabrication. Instead of having a model make an image of a chair, perhaps it could generate a plan for a chair that could be produced. He also sees future uses for generative AI systems in developing more generally intelligent AI agents.

"We have the ability to think and dream in our heads, to come up with interesting ideas or plans, and I think generative AI is one of the tools that will empower agents to do that, as well," Isola says.

How Does AI Impact Privacy?

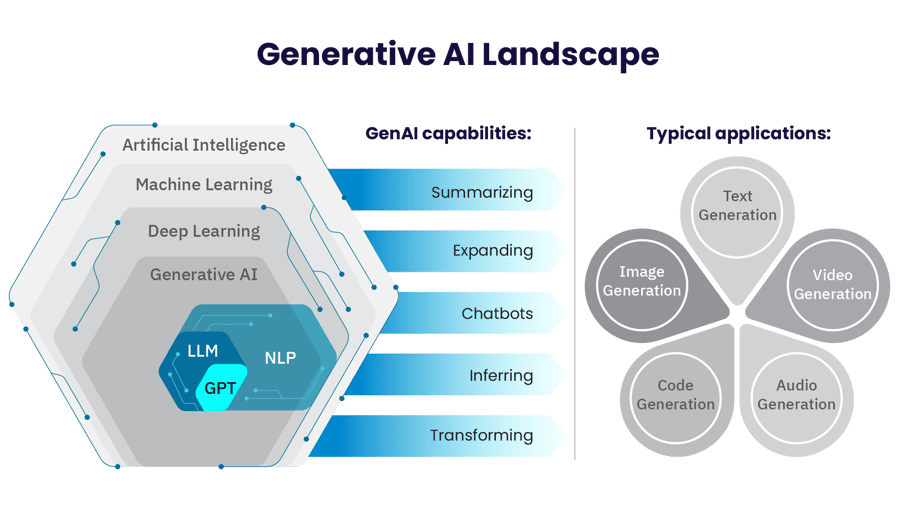

Two additional recent advances that will be discussed in more detail below have played a critical part in generative AI going mainstream: transformers and the breakthrough language models they enabled. Transformers are a type of machine learning that made it possible for researchers to train ever-larger models without having to label all of the data in advance.

This is the basis for tools like Dall-E that automatically create images from a text description or generate text captions from images. These breakthroughs notwithstanding, we are still in the early days of using generative AI to create readable text and photorealistic stylized graphics. Early implementations have had issues with accuracy and bias, and they have been prone to hallucinations and to returning strange answers.

Going forward, this technology could help write code, design new drugs, develop products, redesign business processes and transform supply chains. Generative AI starts with a prompt that could be in the form of text, an image, a video, a design, musical notes, or any input that the AI system can process.

Researchers have been creating AI and other tools for programmatically generating content since the early days of AI. The earliest approaches, known as rule-based systems and later as "expert systems," used explicitly crafted rules for generating responses or data sets. Neural networks, which form the basis of many of today's AI and machine learning applications, flipped the problem around.

Developed in the 1950s and 1960s, the first neural networks were limited by a lack of computational power and small data sets. It was not until the advent of big data in the mid-2000s and improvements in computer hardware that neural networks became practical for generating content. The field accelerated when researchers found a way to get neural networks to run in parallel across the graphics processing units (GPUs) that were being used in the computer gaming industry to render video games.
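As a rough illustration of what "explicitly crafted rules for generating responses" can look like, here is a minimal Python sketch of a keyword-driven responder. The rules, replies, and the `respond` function are invented for this example and do not reproduce any historical expert system.

```python
import re

# Hand-written rules: if any keyword appears in the message, use the reply.
# Both the keywords and the replies are hypothetical examples.
RULES = [
    (("hello", "hi"), "Hello! How can I help?"),
    (("price", "cost"), "Our pricing depends on the plan you choose."),
    (("bye", "goodbye"), "Goodbye!"),
]
FALLBACK = "Sorry, I don't have a rule for that."


def respond(message):
    """Return the reply of the first rule whose keywords match the message."""
    # Split into lowercase words so "this" does not accidentally match "hi".
    words = set(re.findall(r"[a-z']+", message.lower()))
    for keywords, reply in RULES:
        if words & set(keywords):
            return reply
    return FALLBACK


print(respond("Hi there!"))
print(respond("What does it cost?"))
print(respond("Tell me a joke"))
```

Every behavior here has to be written by hand, and anything outside the rules falls through to the fallback. A neural network, by contrast, learns its behavior from examples rather than from rules a person wrote down.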

ChatGPT, Dall-E and Gemini (formerly Bard) are popular generative AI interfaces.

Dall-E. Trained on a large data set of images and their associated text descriptions, Dall-E is an example of a multimodal AI application that identifies connections across multiple media, such as vision, text and audio. In this case, it connects the meaning of words to visual elements.

AI-Powered Advertising

Dall-E 2, a second, more capable version, was released in 2022. It enables users to generate imagery in multiple styles driven by user prompts.

ChatGPT. The AI-powered chatbot that took the world by storm in November 2022 was built on OpenAI's GPT-3.5 implementation. OpenAI has provided a way to interact with and fine-tune text responses via a chat interface with interactive feedback.

GPT-4 was released March 14, 2023. ChatGPT incorporates the history of its conversation with a user into its results, simulating a real conversation. After the incredible popularity of the new GPT interface, Microsoft announced a significant new investment in OpenAI and integrated a version of GPT into its Bing search engine.
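One common way to fold conversation history into a chatbot's results is to resend earlier turns along with each new message. The Python sketch below illustrates that general pattern only; the article does not describe ChatGPT's internals, so the `Conversation` class, the prompt layout, the `max_turns` cutoff, and the `fake_model` stand-in are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps an ordered list of (role, text) turns."""
    messages: list = field(default_factory=list)

    def add(self, role, text):
        self.messages.append((role, text))

    def build_prompt(self, max_turns=6):
        """Join the most recent turns into one prompt ending with the reply cue."""
        recent = self.messages[-max_turns:]
        lines = [f"{role}: {text}" for role, text in recent]
        lines.append("assistant:")
        return "\n".join(lines)


def fake_model(prompt):
    """Hypothetical stand-in for a language model, not a real API.

    It only reports how many earlier lines it was given as context.
    """
    seen = prompt.count("\n")
    return f"(reply conditioned on {seen} earlier lines)"


chat = Conversation()
chat.add("user", "What is a GAN?")
first_reply = fake_model(chat.build_prompt())
chat.add("assistant", first_reply)

# The follow-up question only makes sense if earlier turns are included.
chat.add("user", "Who proposed it?")
second_prompt = chat.build_prompt()
print(second_prompt)
```

Because the second prompt still contains the first question, a model reading it has what it needs to resolve "it" in the follow-up. Capping the history with `max_turns` is one simple way to keep the prompt from growing without bound.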
